How we automated our newsletter link curation with n8n, Python and AI

Remember that tedious task you keep putting off because it takes forever but still needs to get done? Yeah, we had one of those. Every week, our teammate Elysse would spend days combing through our Slack channels, clicking on every shared link, figuring out what the hell each one was about, and crafting clever one-liners with emojis for our newsletter (to her credit, she kicked ass at it).

It was soul-crushing work. But it was also important because these curated links are a key part of our weekly newsletter and feed content for our Internet Plumber podcast. So I did what any self-respecting developer would do: I automated the absolute shit out of it.

The manual process that was killing us

Here’s what had to be done every single week:

- Go through our #code, #design, and #ai Slack channels

- Find all the links shared in the past week

- Click on each link (we’re talking 20-30 links here)

- Actually read/understand what the content was about

- Write a witty one-liner that captures the essence

- Pick the perfect emoji to go with it

- Add everything to our Sanity CMS for the newsletter

This wasn’t a one-sitting kind of task either. It was on-and-off work throughout the week, constantly interrupting other projects. The mental context switching alone was brutal.

Enter the automation stack

I knew we could do better. The solution came in the form of three tools working together:

- n8n for workflow orchestration

- Python for web scraping (in its own container, because I’m fancy like that)

- AI for content understanding and copywriting

Let me break down how these pieces fit together.

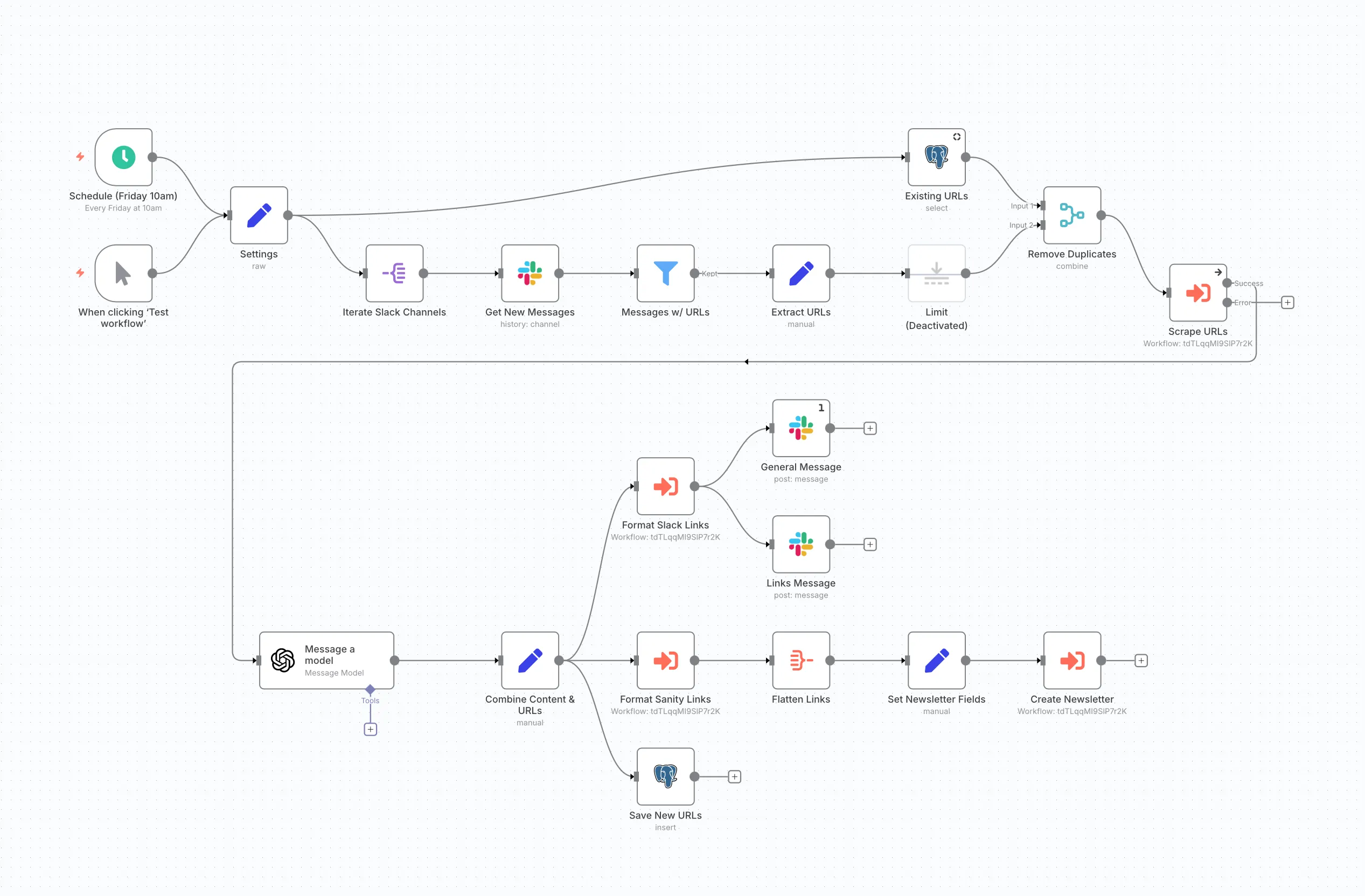

n8n: The conductor

I decided to self-host n8n because I like having full control (and also because I’m cheap). Spun the whole thing up inside Docker, which sounds way more impressive than it actually was. If you haven’t used n8n before, think of it as Zapier’s more technical cousin. You know, the one who actually knows how to code.

The workflow starts by connecting to our Slack workspace and scanning the defined channels (#code, #design, #ai) for any messages containing links from the past week. This gives us a nice clean list to work with.
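
To make that step concrete, here is a rough Python sketch of what "find the links from the past week" boils down to. It is an illustration, not the actual n8n workflow: it assumes messages arrive as dicts with Slack's `ts` and `text` fields, and `extract_links` is a made-up name.

```python
import html
import re
import time

# Slack wraps links in angle brackets: <https://example.com> or <https://example.com|label>.
SLACK_LINK = re.compile(r"<(https?://[^>|]+)(?:\|[^>]*)?>")


def extract_links(messages, now=None, days=7):
    """Return unique URLs from messages newer than `days` days, in first-seen order.

    `messages` is assumed to be a list of dicts with Slack-style "ts" and "text" keys.
    """
    now = time.time() if now is None else now
    cutoff = now - days * 86400
    seen = []
    for msg in messages:
        if float(msg.get("ts", 0)) < cutoff:
            continue  # older than the window
        for url in SLACK_LINK.findall(msg.get("text", "")):
            url = html.unescape(url)  # Slack escapes "&" as "&amp;" in message text
            if url not in seen:
                seen.append(url)
    return seen
```

User mentions like `<@U123>` are skipped because the pattern only matches `http` and `https` targets.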

Python: The heavy lifter

Here’s where I got clever (or carried away, depending on how you look at it). Instead of trying to make n8n do everything, I created a dedicated Python container that sits alongside it. From n8n, I just call out to Python whenever I need to do something that n8n can’t handle natively.

For each URL, my Python script:

- Fetches the page content

- Handles all the edge cases (redirects, timeouts, weird encodings)

- Extracts the meaningful content, stripping away navigation, ads, and other cruft

- Hands the clean data back to n8n to continue the workflow
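
Here is a minimal sketch of those steps using only the standard library. It is not the script running in our container: the function names, the skipped tags, the user agent string, and the 4,000-character cap are all illustrative assumptions, and a real scraper needs more fallbacks than this.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen

# Tags whose contents are usually cruft rather than article text (an assumption).
SKIP_TAGS = {"script", "style", "nav", "header", "footer", "aside", "noscript", "form"}


class TextExtractor(HTMLParser):
    """Collects visible text while ignoring anything nested inside SKIP_TAGS."""

    def __init__(self):
        super().__init__(convert_charrefs=True)
        self.skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS and self.skip_depth > 0:
            self.skip_depth -= 1

    def handle_data(self, data):
        if self.skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def extract_text(html, max_chars=4000):
    """Strip markup and cruft from an HTML string and cap the length."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)[:max_chars]


def fetch_page(url, timeout=10):
    """Fetch a page as text. urlopen follows redirects by default."""
    req = Request(url, headers={"User-Agent": "newsletter-link-bot/0.1"})
    with urlopen(req, timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        body = resp.read()
    try:
        return body.decode(charset, errors="replace")
    except LookupError:  # the server declared a charset Python does not know
        return body.decode("utf-8", errors="replace")
```

Calling `extract_text(fetch_page(url))` gives n8n a plain string to pass along. Pages that render everything client-side will come back nearly empty with this approach, which is one reason the human review step exists.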

This setup gives me the best of both worlds. n8n handles the visual workflow stuff beautifully, but when I need to drop down and do something specific (like parse some janky HTML), I’ve got Python ready to go. No more trying to shoehorn complex logic into n8n’s nodes.

Websites are messy. Some load content dynamically, others block scrapers, and don’t even get me started on sites that render everything client-side. But with enough error handling and fallbacks, we get usable content from about 95% of links. The other 5%? Well, that’s what the human review is for.

I love me some Python.

AI: The creative brain (and occasional comedian)

Here’s where the magic happens. We feed the scraped content to an AI model with a carefully crafted prompt that basically says: “You’re a witty tech newsletter writer. Summarize this content in one punchy sentence and pick an emoji that captures the vibe.”
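
A sketch of how that prompt might be packaged and how the reply might be checked before it goes anywhere near the newsletter. The system prompt is the one quoted above. Everything else is an assumption for illustration: the chat-style message list, the request for a JSON reply, and the helper names. No specific model or provider API is implied.

```python
import json

SYSTEM_PROMPT = (
    "You're a witty tech newsletter writer. Summarize this content in one "
    "punchy sentence and pick an emoji that captures the vibe."
)


def build_messages(url, content, max_content_chars=4000):
    """Build a chat-style message list; the scraped content is capped to keep requests small."""
    user = (
        f"URL: {url}\n\nContent:\n{content[:max_content_chars]}\n\n"
        'Reply with JSON only: {"emoji": "...", "description": "..."}'
    )
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user},
    ]


def parse_reply(reply):
    """Return (emoji, description), or None if the reply is not the JSON we asked for."""
    try:
        data = json.loads(reply)
        return data["emoji"].strip(), data["description"].strip()
    except (ValueError, KeyError, TypeError, AttributeError):
        return None
```

Returning `None` on a malformed reply lets the workflow flag that link for a human instead of publishing garbage.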

The results? Surprisingly good. Sometimes even better than what us humans come up with at 4pm on a Friday. The AI picks up on technical nuance, understands context, and occasionally drops a genuinely funny line. Though it also sometimes thinks a blog post about database optimization deserves a party emoji. 🎉

The beautiful result

What used to take days of scattered work now runs in about 30 seconds. Every Friday morning, our workflow:

- Scans the defined Slack channels

- Finds all shared links

- Scrapes each website

- Generates descriptions and emojis

- Pushes everything to Sanity CMS as a draft for human-in-the-loop review
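
For the last step, here is a hedged sketch of what the request body could look like. Sanity treats documents whose `_id` starts with `drafts.` as drafts, and its HTTP mutation endpoint accepts a JSON body with a `mutations` array. The document type `newsletterLink`, the field names, and the ID scheme are hypothetical, not our actual schema.

```python
import hashlib
import json


def draft_mutation(url, emoji, description, doc_type="newsletterLink"):
    """Build one Sanity mutation that creates a draft for a link if it does not exist yet."""
    # Deriving the ID from the URL means a re-run cannot create duplicates.
    digest = hashlib.sha1(url.encode("utf-8")).hexdigest()[:12]
    return {
        "createIfNotExists": {
            "_id": f"drafts.link-{digest}",  # the "drafts." prefix marks it as a draft
            "_type": doc_type,               # hypothetical schema type
            "url": url,
            "emoji": emoji,
            "description": description,
        }
    }


def build_payload(items):
    """Serialize a list of {"url", "emoji", "description"} dicts into a mutation request body."""
    mutations = [draft_mutation(**item) for item in items]
    return json.dumps({"mutations": mutations}, ensure_ascii=False)
```

`createIfNotExists` is used here rather than `createOrReplace` so that a second run leaves an existing draft alone, including any edits the reviewer has already made.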

That last bit is important. We keep a human (yes, it’s still Elysse) in the loop. The content lands in our CMS as a draft where someone can review it, tweak any descriptions that miss the mark, and ensure nothing weird made it through. It’s the perfect balance of automation and human oversight.

What this means for our team

The time savings are obvious. We’re talking days of work compressed into seconds. But the real win is what the team can do with that reclaimed time. Instead of mind-numbing link clicking, they can spend more time on strategic content and on engaging with our community.

Plus, the consistency is a game-changer. The automated system never misses a link, never forgets to check a channel, and processes everything with the same level of attention. No more “oh shit, I forgot to check the #ai channel this week” moments.

The gotchas

As usual, it wasn’t all rainbows and unicorns 🦄. Some “fun” challenges we hit:

- Rate limiting: Turns out websites don’t like it when you slam them with requests. Who knew? Had to add delays and actually respect robots.txt like a good citizen

- Dynamic content: Sites that load everything via JavaScript are the bane of my existence. Special handling required, and by special I mean “painful”

- AI hallucinations: Sometimes the AI would completely misunderstand content and write something bonkers. Like describing a serious security vulnerability as “spicy” 🌶️

- Character limits: Newsletter platforms have limits, so descriptions needed to be concise. The AI’s first drafts read like novels

But these are solvable problems. A bit of retry logic here, some prompt engineering there, and we had a mostly robust system. The Docker setup actually helped here because when things inevitably broke, I could debug the Python container separately without taking down the whole workflow.
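
Two of those fixes, polite fetching and retry logic, can be sketched with the standard library alone. This is an illustration under assumptions rather than our production code: `PoliteGate`, `with_retries`, the two-second delay, and the user agent name are all made up for the example.

```python
import time
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser


class PoliteGate:
    """Checks robots.txt and enforces a minimum delay between hits to the same host."""

    def __init__(self, user_agent="newsletter-link-bot", min_delay=2.0):
        self.user_agent = user_agent
        self.min_delay = min_delay
        self.robots = {}    # host -> RobotFileParser
        self.last_hit = {}  # host -> timestamp of the previous request

    def load_robots(self, host, lines=None):
        rp = RobotFileParser()
        if lines is None:
            rp.set_url(f"https://{host}/robots.txt")
            rp.read()  # network call; may raise on connection errors
        else:
            rp.parse(lines)  # lets callers supply rules directly, e.g. in tests
        self.robots[host] = rp

    def allowed(self, url):
        host = urlparse(url).netloc
        if host not in self.robots:
            self.load_robots(host)
        return self.robots[host].can_fetch(self.user_agent, url)

    def wait_turn(self, url, sleep=time.sleep, clock=time.monotonic):
        host = urlparse(url).netloc
        elapsed = clock() - self.last_hit.get(host, float("-inf"))
        if elapsed < self.min_delay:
            sleep(self.min_delay - elapsed)
        self.last_hit[host] = clock()


def with_retries(fn, attempts=3, base_delay=1.0, sleep=time.sleep, retry_on=(OSError,)):
    """Call fn(), retrying with exponential backoff; re-raise after the final attempt."""
    for attempt in range(attempts):
        try:
            return fn()
        except retry_on:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)
```

Retrying on `OSError` covers timeouts and `urllib` connection errors, since both are subclasses of it. The injectable `sleep` and `clock` arguments exist so the timing logic can be tested without actually waiting.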

Should you try automation?

If you’re drowning in any repetitive content curation task, absolutely. The stack we used (n8n + Python + AI) is accessible and powerful. You don’t need to be a machine learning expert or a Python wizard to make this work.

The key is starting simple. Maybe you don’t need to scrape websites, and just organizing links is enough. Maybe you don’t need AI, and extracting titles and meta descriptions would do the job. Build the minimum viable automation and expand from there. Trust me, your first version doesn’t need to be perfect. Mine sure as hell wasn’t.
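
As an example of how small that no-AI version can be, here is a sketch that pulls a page's title and meta description from an HTML string using only the standard library. The class and function names are illustrative, and falling back to `og:description` is my own assumption about what is useful.

```python
from html.parser import HTMLParser


class MetaExtractor(HTMLParser):
    """Grabs the <title> text and the first description-style <meta> tag."""

    def __init__(self):
        super().__init__(convert_charrefs=True)
        self.in_title = False
        self.title = ""
        self.description = ""

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self.in_title = True
        elif tag == "meta" and not self.description:
            # Accept either <meta name="description"> or <meta property="og:description">.
            name = (attrs.get("name") or attrs.get("property") or "").lower()
            if name in ("description", "og:description"):
                self.description = (attrs.get("content") or "").strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self.in_title = False

    def handle_data(self, data):
        if self.in_title:
            self.title += data


def title_and_description(html):
    """Return (title, description); either may be an empty string if missing."""
    parser = MetaExtractor()
    parser.feed(html)
    parser.close()
    return parser.title.strip(), parser.description
```

For a lot of links, that pair alone is enough for a human to write the one-liner without opening the page.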

The tools are there. n8n is free to self-host, Python containers are easy to spin up, and AI APIs are cheaper than a developer’s hourly rate. The question is: what soul-crushing task are you going to automate next? Because I guarantee you’ve got at least one.

Tired of building the same automations over and over? Our team at Input Logic specializes in workflow automation and custom integrations. Let’s talk about killing your repetitive tasks for good. Ready to take back Friday?