From zero to Spotify in 48 hours: Building an AI country album

I just released a country music EP on Spotify. The whole thing - from concept to live on all major streaming platforms - took 48 hours.

Here’s the thing though. I’ve never recorded music professionally. Hell, I can barely play guitar beyond a few chords. But I wanted to understand what was actually possible with AI tools in 2025, so I created “Dale Tucker” to see how far I could take the idea.

No, this isn’t another “AI is gonna replace all musicians” take. It’s about understanding the tools from a builder’s perspective. What works, what doesn’t, and what you can actually ship.

The challenge

The goal was simple: create a complete 6-song EP using only AI tools and basic production software, then distribute it to Spotify, Apple Music, YouTube Music and Amazon Music. Timeline? 48 hours from start to finish.

Why? Because everyone talks about AI capabilities in abstract terms. There’s a lot of blue sky and empty promises. I wanted to actually build something and see where the friction points were. Plus, the idea of becoming a country singer named Dale Tucker for a weekend sounded hilarious.

Spoiler: I succeeded. But not without learning a few things along the way.

Opening the toolbox

Before I could make anything, I needed to figure out which AI tools were actually worth using. Turns out there are a LOT of AI music generation tools out there. Too many.

I tested Udio, Suno, ElevenLabs, Mureka, and Soundraw. Each one promised to be the “game-changer” for AI music. Sound familiar? Just like the JavaScript ecosystem I wrote about years ago, new AI tools seem to come out daily, with everyone claiming theirs is the best.

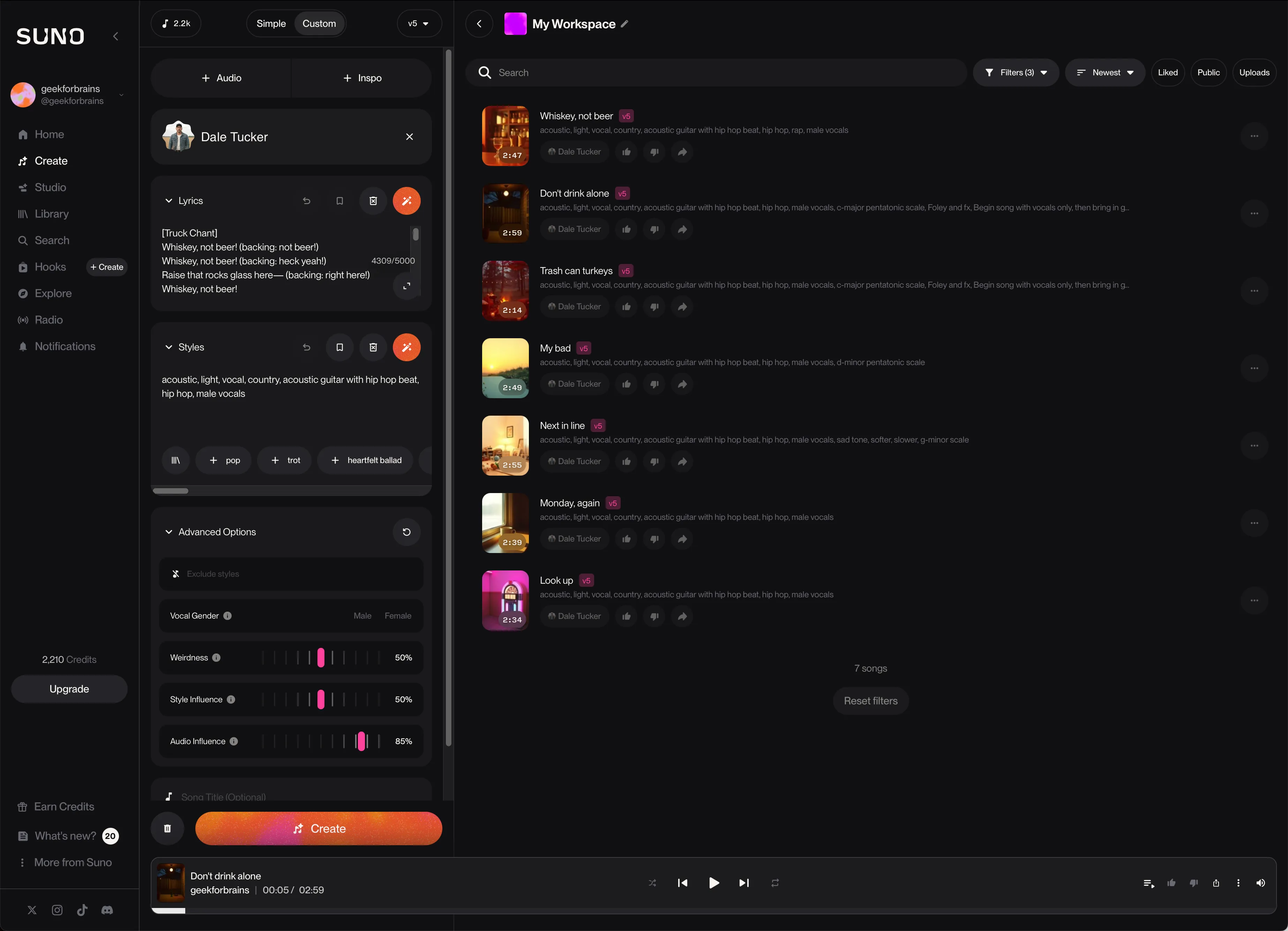

After spending a few hours with each, I settled on ElevenLabs and Suno. ElevenLabs for voice training and generation, Suno for the actual music production. The others either lacked the control I needed, produced inconsistent quality, specialized in background music only (no vocals) or had interfaces that made me want to throw my laptop out a window (looking at you, Soundraw).

This is probably the most important part of the whole experiment - don’t just grab the first tool you find. Test them. Push them. See where they break. I would have wasted way more time if I’d committed to the wrong tools early.

Building Dale Tucker

Once I had my tools, I needed to create a persona. This wasn’t just about making music - I wanted consistency. If I’m going to create a “singer”, that singer needs to sound like the same person across all six songs.

First step was training my voice in ElevenLabs. I recorded maybe 10 minutes of myself talking, reading, singing terribly. The tool analyzes it and creates a voice model. Pretty straightforward. The output quality was surprisingly good - it actually sounded like me, just with better pitch control than I have in real life.

Then I took that audio and used it to train a persona in Suno. The persona gave me vocal consistency, but it took a few songs to dial it in. I’d generate a track, listen to what worked and what didn’t, then adjust. Once the persona felt right, I started combining it with Suno’s style parameters.

This is where it gets interesting. The style parameter lets you define the type of music, vibe, BPM, and even the key or scale you want. I kept the style mostly consistent across all six songs - country/folk aesthetic, specific tempo ranges - but I could adjust the key per song depending on what felt right. One song needed G minor pentatonic, another worked better in a different key entirely.
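A minimal sketch of that idea: keep one shared style definition and vary only the key per song. This is not Suno's API, just plain string composition you could paste into a style field. The class and function names, the song labels, the vibe text, the BPM range, and the second key are all hypothetical placeholders; only "country/folk" and "G minor pentatonic" come from the project.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BaseStyle:
    """Style settings shared by every song on the EP (values are placeholders)."""
    genre: str
    vibe: str
    bpm_range: tuple[int, int]


def style_prompt(base: BaseStyle, key: str) -> str:
    """Combine the shared style with a per-song key into one style string."""
    low, high = base.bpm_range
    return f"{base.genre}, {base.vibe}, {low}-{high} BPM, {key}"


# Hypothetical values: the vibe, tempo range, song labels, and "D major" are made up.
EP_STYLE = BaseStyle(genre="country/folk", vibe="warm acoustic", bpm_range=(90, 110))
SONG_KEYS = {"song_a": "G minor pentatonic", "song_b": "D major"}

if __name__ == "__main__":
    for title, key in SONG_KEYS.items():
        print(title, "->", style_prompt(EP_STYLE, key))
```

Freezing the shared style makes it harder to drift between songs by accident: the only thing that changes from track to track is the argument you pass in.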

That level of control is what keeps AI-generated music from feeling like generic slop. Human-guided AI is powerful. The garbage you hear online is what happens when you let tools run unhinged without direction. My approach was deliberate - test, adjust, iterate, lock in what works.

Could I have skipped ElevenLabs and just used Suno’s default voices? Sure. But then I wouldn’t have learned how to chain tools together or maintain consistency across platforms. That’s the kind of thing that matters when you’re building real products.

Writing songs with ChatGPT

Now for the creative part. I’m not a songwriter. I can write code and technical documentation, but lyrics? That’s a different beast.

The whole project started with a joke. I made some comment about Mondays and coffee in our work Slack, and someone said it sounded like a country song. So “Monday, Again” became the first track. The rest, as they say, is history.

I started pulling from actual experiences. “Trash Can Turkey” came from a family Thanksgiving camping trip where we literally cooked turkey in an upside-down metal garbage can surrounded by charcoal. “Look Up” was about everyone being glued to their phones - ironic coming from someone in tech, but it bothers me. You go out to eat and nobody’s talking. They’re all just staring at their devices. These tools are supposed to enrich our lives, not overtake them. I find that sad.

I’d write a rough concept, maybe a few lines I thought were decent, then hand it to ChatGPT.

“Make this sound like a country song about X, but keep it authentic, not cheesy.”

The first attempts were garbage. Too generic. Too “AI” sounding. But here’s what I learned - you have to iterate. I’d take ChatGPT’s output, mark what I liked, what felt fake, and ask for revisions. Sometimes this took 10-15 rounds per song. The goal wasn’t to let AI write the song, it was to use AI as a collaborative tool to get to something I’d actually write if I knew how. I think that’s an important distinction.

By song three, I had a process down. Give the tool context about the emotion I wanted, examples of phrases that felt right, and very specific direction about what to avoid. The lyrics ended up feeling genuine because I put in the work to make them mine. The surprising side effect was that I fell in love with my own country music. Weird…
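That process (emotion, phrases that feel right, things to avoid) can be captured as a reusable prompt template. The sketch below is one hedged way to do it in Python. The function name and the example emotion, phrases, and avoid-list are hypothetical, not the actual prompts used for the EP, and the resulting text is meant to be pasted into a chat window rather than sent through any particular API.

```python
def build_lyric_prompt(concept, emotion, keep_phrases, avoid):
    """Assemble one revision prompt from the three kinds of direction described above."""
    lines = [
        f"Write country song lyrics about: {concept}",
        f"Emotion to convey: {emotion}",
        "Phrases that feel right (match this voice):",
        *[f"- {phrase}" for phrase in keep_phrases],
        "Avoid:",
        *[f"- {item}" for item in avoid],
        "Keep it authentic, not cheesy.",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical inputs for illustration only.
    prompt = build_lyric_prompt(
        concept="Mondays and coffee",
        emotion="tired but amused",
        keep_phrases=["the pot's already empty"],
        avoid=["trucks and dirt roads cliches", "forced rhymes"],
    )
    print(prompt)
```

Keeping the keep-list and avoid-list as plain Python lists means each of the 10-15 revision rounds is just an edit to those lists, and the prompt stays structured the same way every time.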

Production and distribution

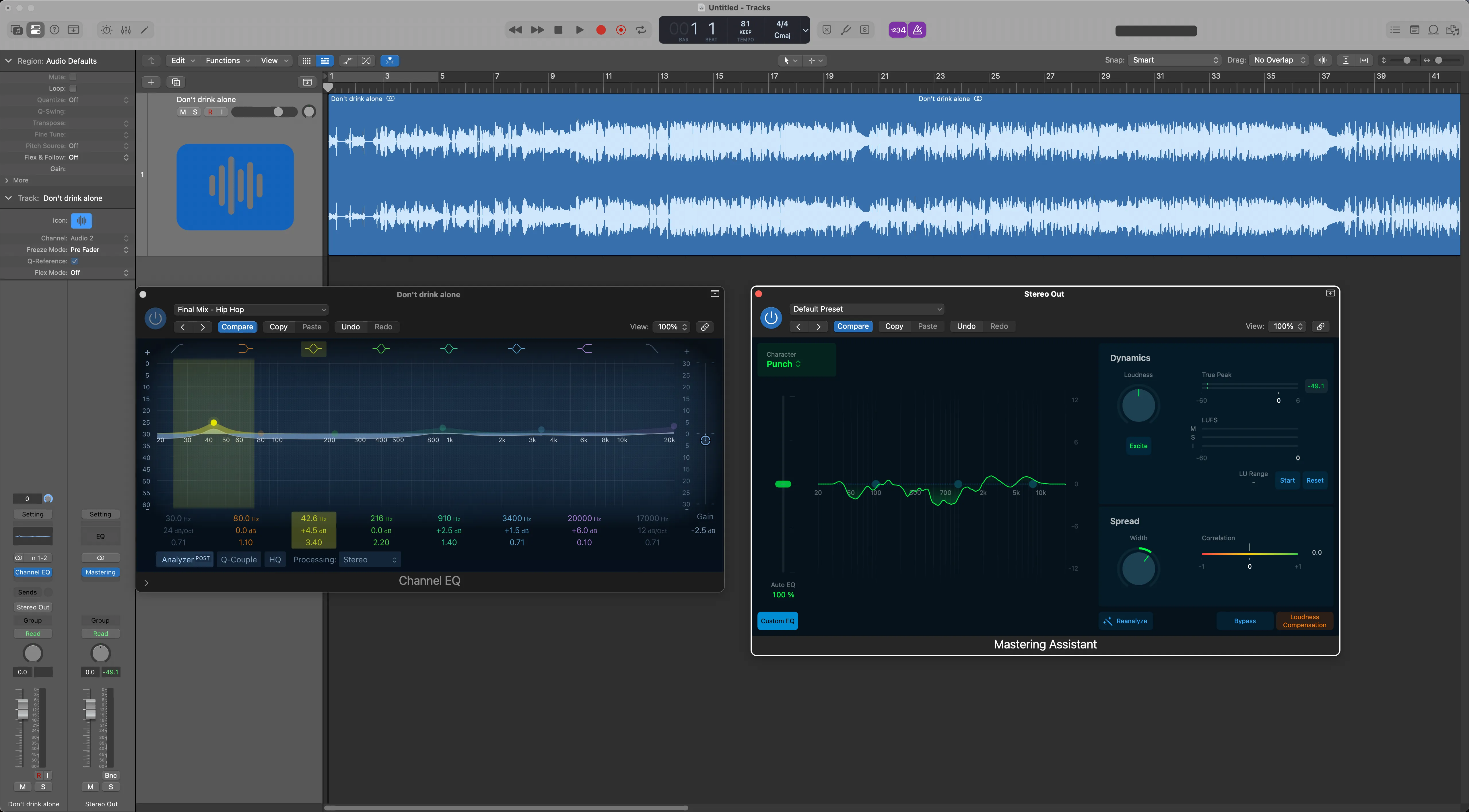

Once I had the six songs generated from Suno, they needed work. AI generates decent tracks, but they’re not mastered. The levels are all over the place. Some have weird artifacts or glitches.

I pulled everything into Logic Pro and spent a few hours EQ’ing and mastering. This is where having some technical audio knowledge helps. Could someone without that experience do it? Probably, but it would take longer. YouTube has enough tutorials to figure it out.
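To make "levels all over the place" concrete, here is a rough sketch that measures peak and RMS level in dBFS for each track, so you can see how far apart they are before mastering. It is only a sanity check, not what Logic Pro does internally, and streaming loudness is normally measured in LUFS rather than plain RMS. The function names are hypothetical, and the two "tracks" are generated sine waves standing in for real audio, which you would need to decode into float samples between -1.0 and 1.0 first.

```python
import math


def peak_dbfs(samples):
    """Highest absolute sample value, in dB relative to full scale (1.0)."""
    peak = max(abs(s) for s in samples)
    return 20 * math.log10(peak) if peak > 0 else float("-inf")


def rms_dbfs(samples):
    """Average signal power, in dB relative to full scale (1.0)."""
    mean_square = sum(s * s for s in samples) / len(samples)
    return 10 * math.log10(mean_square) if mean_square > 0 else float("-inf")


def sine(amplitude, freq_hz=440, sample_rate=44100, seconds=1.0):
    """Generate a test tone as a stand-in for decoded audio samples."""
    n = int(sample_rate * seconds)
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


if __name__ == "__main__":
    # Stand-in "tracks": one hot, one quiet.
    tracks = {"loud_track": sine(0.9), "quiet_track": sine(0.2)}
    for name, samples in tracks.items():
        print(f"{name}: peak {peak_dbfs(samples):.1f} dBFS, RMS {rms_dbfs(samples):.1f} dBFS")
```

A big gap in RMS between tracks is the kind of inconsistency that makes an EP feel uneven from song to song, and it is a reasonable first thing to even out.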

For album art, I used Google’s Gemini “Nano Banana” model. I wanted a photo of me as a cowboy in a wheat field with a guitar. But here’s the smart part - I used an actual photo of myself as reference. AI is notoriously bad at keeping a person looking like the same person from one image to the next, so if I need more promotional images later, I can maintain consistency by using other photos of myself in different poses. The AI just adds the cowboy aesthetic and environment.

The final step was distribution. I used DistroKid for this. Upload the tracks, add metadata and lyrics, select which platforms you want, pay a small fee, and you’re done. Within 48 hours, Dale Tucker was live on Spotify, Apple Music, YouTube Music and Amazon Music.

No record label. No producer. No studio time. Just me and a collection of AI tools.

Let’s just pause and think about that for a second.

What actually matters here

This experiment wasn’t about proving I could make music. It was about understanding AI tool integration, workflow design, and where the human still matters.

Tool selection is critical. I could have stuck with the first tool I tried and probably produced something. But it would have been worse and taken longer. Take time to evaluate.

Workflow design matters more than individual tools. The fact that I could use ElevenLabs output to train Suno personas? That’s workflow thinking. Most people would use each tool in isolation and miss those integration opportunities.

AI augments, it doesn’t replace. Every song required dozens of decisions from me. Which lyrics to keep, what musical direction to take, how to master the final tracks. The AI did the heavy lifting, but I did the decision making. The “human touch”.

Consistency requires strategy. Using reference photos, trained personas, and specific musical parameters wasn’t accidental. It was necessary to make this feel like a cohesive project rather than randomly generated content.

Speed to market has fundamentally changed. What used to take months now takes days. That’s not hyperbole. Dale Tucker went from concept to streaming in 48 hours. The implications for rapid prototyping and iteration are massive.

Why this matters for development work

You might be wondering why a tech nerd like me cares about AI music generation. Fair question.

The principles are the same. Whether you’re building a music project or a client app, the skills are identical:

- Rapid prototyping and iteration

- Tool evaluation under pressure

- Workflow integration across platforms

- Understanding where AI helps and where it doesn’t

- Shipping complete projects, not just demos

These “fun” experiments teach you things you can’t learn from documentation. You hit real problems. You have to debug. You have to make trade-offs. And you learn what’s actually possible versus what’s marketing hype.

Plus, clients don’t want to hear about your theoretical AI knowledge. They want to see what you’ve built. Dale Tucker is proof you can take an unfamiliar domain, learn the tools quickly, and ship something complete.

Final thoughts

Did I become a real country singer? No. Is Dale Tucker going to top the charts? Absolutely not. But that was never the point.

The point was to understand what’s actually possible with AI tools in 2025 when you approach them systematically. And the answer is: a lot more than most people think.

I spent two days creating an entire music project from scratch with no prior experience. The tools are good enough now that domain expertise matters less than systematic thinking and willingness to iterate.

Will I make more Dale Tucker songs? Maybe. It was genuinely fun. But more importantly, I learned things about AI implementation that I can apply to actual client work. That’s the real value.

If you’re interested in what AI could do for your project, or if you want to chat about rapid prototyping and tool integration, reach out. Let’s build something fun together.

And if you want to hear what 48 hours of AI music generation sounds like, Dale Tucker is on Spotify. Fair warning - it’s definitely country music.